CAREER: Coordinated Power Management in Colocation Data Centers (NSF-CNS-1551661, PI: Shaolei Ren)

Overview (Blog, Slides)

As critical assets supporting exploding information technology (IT) demands and the digital economy, data centers have been expanding massively in both number and scale, resulting in a formidable power demand of 38GW in 2012 (a growth of 63% compared to 2011) and collectively accounting for over 2% of global electricity usage. Rising energy prices and heavy use of carbon-intensive electricity have placed an increasing emphasis on optimizing data center power management. While the progress is encouraging, another important type of data center, the multi-tenant data center (commonly called “colocation” or “colo”), has been largely hidden from the public and rarely discussed (at least in research papers), although it is very common in practice and located almost everywhere, from Silicon Valley to the gambling capital, Las Vegas.

Both growing operational costs and environmental issues are significant concerns for multi-tenant data center operators and, if left unaddressed, are a major hurdle for sustainable growth of the digital economy.

To achieve energy sustainability in colocation data centers, this project proposes novel market-based approaches to reshape individual tenants’ power management by investigating the following complementary research thrusts: (i) holistically optimizing economic incentives offered to tenants for energy saving, to limit the usage of carbon-intensive electricity; and (ii) enabling colocation data center demand response to minimize the colocation operator's operating cost, increase the adoption of renewable energy, and enhance power grid reliability. In addition, we study an emerging threat, well-timed power attacks, and present defense mechanisms to safeguard multi-tenant colocation data centers.

|

|

|

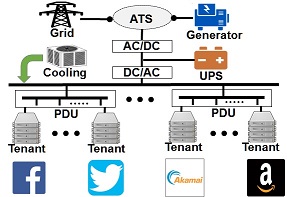

| Overview of multi-tenant data center |

Inside of Switch SuperNAP data center (from: www.supernap.com) |

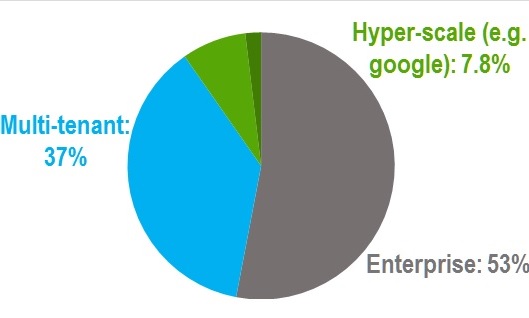

Electricity usage by data center segment (compiled based on NRDC data and excluding server closets) |

Why do multi-tenant colocation data centers require new research?

What is a multi-tenant data center and why does it matter?

Unlike a Google-type data center where the operator manages both the IT equipment and the facility, a multi-tenant colocation data center is a shared facility where multiple tenants house their own servers in shared space and the data center operator is mainly responsible for facility support (like power, cooling, and space). Although the boundary is blurring, multi-tenant data centers can generally be classified as either wholesale or retail: wholesale data centers (like Digital Realty) primarily serve large tenants, each having a power demand of 500kW or more, while retail data centers (like Equinix) mostly target tenants with smaller demands.

Multi-tenant data centers serve almost all industry sectors, including finance, energy, major web service providers, and content delivery network providers. Even some IT giants lease multi-tenant data centers to complement their own data center infrastructure. For example, Google, Microsoft, Amazon, and eBay are all large tenants in a hyper-scale multi-tenant data center in Las Vegas, NV, and Facebook leases a large data center in Singapore to serve its users in Asia.

Multi-tenant data centers and clouds are also closely tied. Many public cloud providers, like SalesForce, that don't want to or can't build their own massive data centers lease capacity from multi-tenant data center providers. Even the largest players in the public cloud, like Amazon, use multi-tenant data centers to quickly expand their services, especially in regions outside the U.S. In addition, with the emergence of hybrid cloud as the most popular option, many companies are housing the entirety of their private clouds in multi-tenant data centers, while large public cloud providers are forging partnerships with multi-tenant data center providers to help tenants leverage public clouds to complement their private clouds.

|

|

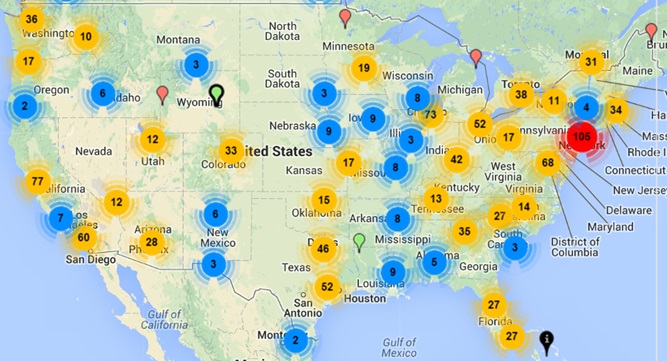

| Map of US colocation data centers (from: www.datacentermap.com) |

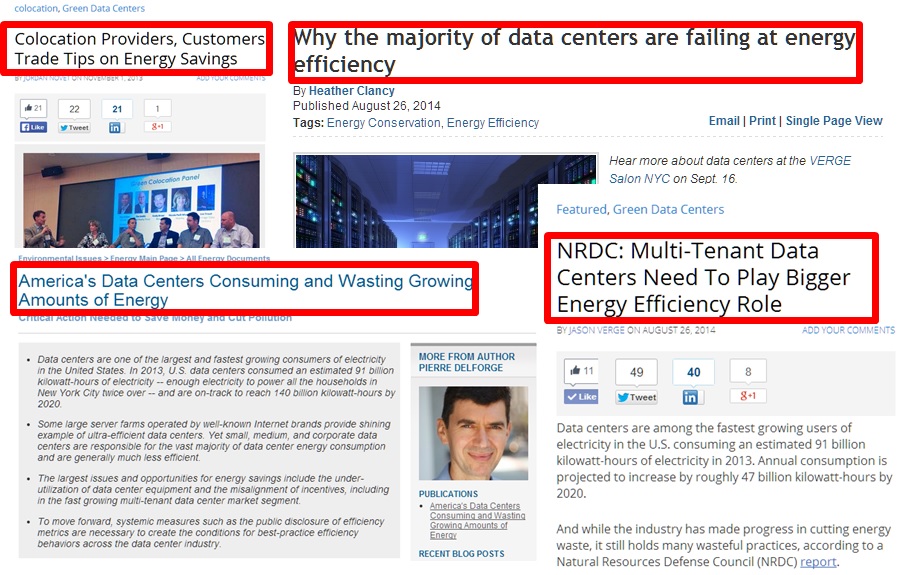

News reports on colocation data centers |

Today, the U.S. alone has over 1,400 large multi-tenant data centers, which consumed nearly five times as much energy as all Google-type data centers combined (37.3% versus 7.8% of all data center energy usage, excluding tiny server closets). Driven by the surging demand for web services, cloud computing, edge computing and Internet of Things, the multi-tenant data center industry is expected to continue its rapid growth, reaching tier-2 regions and markets. While public attention mostly goes to large IT giants that continuously expand their data center infrastructure, multi-tenant data center providers are also building their own, at an even faster pace.

What are the limitations of the existing research?

There has been a significant amount of research on optimizing data center operation, and we provide a snapshot as follows. For energy efficiency, dynamically turning on/off servers has been extensively studied to achieve “power proportionality”. In geo-distributed data centers, geographic load balancing is an important technique to exploit location diversities for cost minimization as well as brown energy reduction. Thermal-aware server management and scheduling, battery management, peak power budgeting/allocation and virtual machine (VM) resource allocation, among others, have also received tremendous attention. More recently, data center demand response has become a trending topic to improve power grid stability and increase adoption of intermittent renewables.

While various power management solutions have been proposed to optimize data center operation, many of them focus on owner-operated Google-type data centers where operators have full control over IT computing “knobs” such as workload scheduling. Multi-tenant data centers, in contrast, have been much less studied by the research community. While these two types of data centers share many of the same high-level goals, like energy efficiency, utilization and renewable integration, many of the existing approaches proposed for Google-type data centers don't apply to multi-tenant data centers, which face additional challenges due to the operator's lack of control over tenants’ servers.

Moreover, we discover that the unique physical colocation of multiple tenants’ server systems in a shared multi-tenant data center facility can leak important data center-level utilization information. Such information can be exploited by attackers (i.e., malicious tenants) to compromise data center availability and cause downtime incidents for other tenants sharing the facility.

Research Thrusts

Throughout this project, we seek both analytical and experimental evidence to demonstrate the effectiveness of our proposed solutions.

As colocation data centers have not received as much attention from the research community as Google-type data centers, there are a myriad of new research problems.

Maximizing Infrastructure Utilization and Performance

Accommodating the accelerating demand for data center capacity is costly: constructing a new data center or expanding an existing data center's capacity (typically measured in IT critical power) can be a multimillion or even multi-billion dollar project. Thus, data center operators commonly oversubscribe the existing infrastructure throughout the power hierarchy (e.g., at the UPS level and PDU level) by deploying more servers than the power budget/capacity allows.

A dangerous consequence of oversubscription is the emergence of power emergencies, which pose significant challenges for data center uptime. To address the lack of coordination among tenants in shedding power during a power emergency, we propose a novel COOrdinated Power management solution, called COOP, that leverages a market mechanism called supply function bidding to incentivize and coordinate individual tenants’ power demand reduction.
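To make the idea of supply function bidding concrete, the following is a minimal sketch rather than COOP's actual mechanism. It assumes, purely for illustration, that each tenant bids a linear supply function (power shed = `slope` × price, capped at `max_cut`) and that the operator searches for the lowest uniform price at which the total reduction covers the shortfall. The `Bid` class, the field names, and the linear form are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Bid:
    tenant: str
    slope: float    # hypothetical bid: kW the tenant sheds per unit of price (>= 0)
    max_cut: float  # kW; the most this tenant is able to shed


def supply(bid: Bid, price: float) -> float:
    """Power reduction (kW) a tenant supplies at a given uniform price."""
    return min(bid.slope * price, bid.max_cut)


def clear_market(bids: list[Bid], target_kw: float, tol: float = 1e-9):
    """Bisect for the lowest price at which total supply meets the target."""
    reachable = sum(b.max_cut for b in bids if b.slope > 0)
    if target_kw > reachable:
        raise ValueError("target exceeds what tenants can shed")
    lo, hi = 0.0, 1.0
    while sum(supply(b, hi) for b in bids) < target_kw:
        hi *= 2.0  # grow the bracket until supply covers the target
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if sum(supply(b, mid) for b in bids) >= target_kw:
            hi = mid
        else:
            lo = mid
    return hi, {b.tenant: supply(b, hi) for b in bids}


# Illustrative numbers only: a 60 kW shortfall shared by two tenants.
price, cuts = clear_market([Bid("A", 10.0, 50.0), Bid("B", 20.0, 30.0)], 60.0)
print(price, cuts)
```

Because every tenant is paid the same clearing price, a tenant that can shed power cheaply (a steep supply function) ends up shedding more, which is the coordination effect the market is meant to produce.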

Figures: illustration of spot power capacity; overview of SpotDC to utilize spot power capacity

While oversubscription is effective at increasing capacity utilization, data center power infrastructure is still largely under-utilized today, wasting more than 15% of the capacity on average, even in state-of-the-art data centers like Facebook's. This under-utilization remains because, regardless of oversubscription, the aggregate server power demand fluctuates and does not always stay at high levels, whereas the infrastructure is provisioned to sustain a high demand in order to avoid frequent emergencies that can compromise data center reliability. Consequently, there exists a varying amount of unused power capacity, which we refer to as spot power capacity. We propose a novel market approach, called Spot Data Center capacity management (SpotDC), which leverages demand bidding and dynamically allocates spot capacity to tenants to mitigate performance degradation. Such flexible capacity provisioning complements the traditional offering of guaranteed capacity, and is aligned with the industrial trend. Crucially, SpotDC is “win-win”: tenants improve performance by 1.2-1.8x (on average) at a marginal cost increase compared to the no spot capacity case, while the operator can increase its profit by 9.7% without any capacity expansion.
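As a rough illustration of demand bidding for spot capacity, the sketch below assumes each tenant submits a linear demand function (wanted kW = `max_kw` − `slope` × price) and the operator scans a price grid for the revenue-maximizing uniform price whose total allocation fits within the available spot capacity. The bid format, the grid search, and all numbers are assumptions made for this example, not SpotDC's actual design.

```python
def demand(bid, price):
    """kW of spot capacity a tenant wants at a given price ($/kW)."""
    max_kw, slope = bid
    return max(0.0, max_kw - slope * price)


def allocate_spot(bids, spot_kw, price_grid):
    """Pick the uniform price on the grid that maximizes operator revenue
    while keeping the total allocation within the available spot capacity."""
    best = None
    for price in price_grid:
        alloc = {tenant: demand(bid, price) for tenant, bid in bids.items()}
        total = sum(alloc.values())
        if total > spot_kw:
            continue  # price too low: tenants would ask for more than is free
        revenue = price * total
        if best is None or revenue > best[2]:
            best = (price, alloc, revenue)
    return best


# Hypothetical bids: (max_kw, slope). 40 kW of spot capacity is available.
bids = {"A": (40.0, 100.0), "B": (30.0, 50.0)}
grid = [i / 20 for i in range(13)]  # candidate prices 0.00 .. 0.60
price, alloc, revenue = allocate_spot(bids, spot_kw=40.0, price_grid=grid)
print(price, alloc, revenue)
```

Re-running the allocation whenever the unused capacity changes is what makes the offering "spot": tenants get extra power only while it is actually available.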

M. A. Islam, X. Ren, S. Ren, and A. Wierman, “A Spot Capacity Market to Increase Power Infrastructure Utilization in Multi-Tenant Data Centers,” IEEE International Symposium on High Performance Computer Architecture (HPCA), 2018. [Slides]

M. A. Islam, X. Ren, S. Ren, A. Wierman, and X. Wang, “A Market Approach for Handling Power Emergencies in Multi-Tenant Data Center,” IEEE International Symposium on High Performance Computer Architecture (HPCA), 2016. [Slides]

Improving Energy Efficiency

A vast majority of the existing power management techniques require that data center operators have full control over IT computing resources. However, a colocation operator lacks control over tenants’ servers; instead, tenants individually manage their own servers and workloads, without coordination with others. Furthermore, the current pricing models that colocation operators use to charge tenants (e.g., based on power subscription) fail to align the tenants’ interests with reducing the colocation's overall cost.

We propose RECO (REward for COst reduction), which uses financial reward as a lever to shift power management in a colocation from uncoordinated to coordinated. RECO pays participating tenants for energy saving at a time-varying reward rate such that the colocation operator's overall cost (including electricity cost and rewards to tenants) is minimized. RECO models time-varying cooling efficiency based on outside ambient temperature and predicts solar energy generation at runtime. To tame the peak power demand charge, RECO employs feedback-based online optimization, dynamically updating and keeping track of the maximum power demand as a runtime state value. If the new (predicted) power demand exceeds the current state value, then an additional peak power demand charge would be incurred, and the colocation operator may need to offer a higher reward rate to incentivize more energy reduction by tenants. RECO also encapsulates a learning module that uses a parametric learning method to dynamically predict how tenants respond to the colocation operator's reward.
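The peak-tracking feedback described above can be sketched as follows. This is an illustrative simplification, not RECO itself: it assumes one-hour slots, a hypothetical linear tenant response (`response_slope` kW shed per unit of reward rate, capped at `max_cut_kw`), and a small grid of candidate reward rates. Cooling efficiency, solar prediction, and the learning module are omitted, and all parameter names are invented for the example.

```python
def choose_reward(base_kw, peak_kw, energy_price, demand_charge,
                  response_slope, max_cut_kw, rates):
    """Pick the reward rate minimizing this slot's cost: energy + rewards
    + demand charge on any increase over the tracked peak."""
    best = None
    for rate in rates:
        cut = min(response_slope * rate, max_cut_kw)  # assumed tenant response
        load = base_kw - cut
        cost = (energy_price * load                   # electricity bill (1 h slot)
                + rate * cut                          # rewards paid to tenants
                + demand_charge * max(0.0, load - peak_kw))
        if best is None or cost < best[2]:
            best = (rate, load, cost)
    return best


def run(base_loads, peak_kw, **params):
    """Process slots in order, feeding the updated peak back as state."""
    decisions = []
    for base_kw in base_loads:
        rate, load, _ = choose_reward(base_kw, peak_kw, **params)
        peak_kw = max(peak_kw, load)  # runtime state: maximum demand so far
        decisions.append(rate)
    return decisions, peak_kw


params = dict(energy_price=0.1, demand_charge=10.0, response_slope=500.0,
              max_cut_kw=100.0, rates=[0.0, 0.05, 0.1, 0.15, 0.2])
print(run([900.0, 1000.0], peak_kw=950.0, **params))
```

In this toy setting the chosen rate is higher in the slot where the unmanaged load would exceed the tracked peak, mirroring the behavior described in the text.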

M. A. Islam, H. Mahmud, S. Ren, and X. Wang, “Paying to Save: Reducing Cost of Colocation Data Center via Rewards,” IEEE International Symposium on High Performance Computer Architecture (HPCA) 2015. [Slides]

Greening Data Center Demand Response

While traditionally viewed purely as a negative, the massive energy usage of data centers has recently begun to be recognized as an opportunity. In particular, because the energy usage of data centers tends to be flexible, they are promising candidates for demand response, which is a crucial tool for improving grid reliability and incorporating renewable energy into the power grid. From the grid operator's perspective, a data center's flexible power demand serves as a valuable energy buffer (or virtual battery), helping balance grid power's supply and demand at runtime.

Data centers are important participants in emergency demand response (EDR) programs. EDR is the most widely adopted demand response program in the U.S., representing 87% of demand reduction capabilities across all reliability regions. Specifically, during emergency events (e.g., extreme weather or natural disasters), EDR coordinates many large energy consumers, including data centers, to shed their power loads, serving as the last line of protection against cascading blackouts that could result in economic losses of billions of dollars. The U.S. EPA has identified data centers as critical resources for EDR, as attested by the following example: on July 22, 2011, hundreds of data centers participated in EDR by cutting their electricity usage before a large-scale blackout could occur. Further, as more renewables are incorporated into the grid and result in higher volatility in power supply, we anticipate that EDR will play an even more crucial role.

The emergence of data center demand response, albeit highly desired, has also raised environmental concerns, because data centers typically participate by turning on backup diesel generators that are both costly and environmentally unfriendly. An on-site diesel generator produces over 50 times the NOx emissions per unit of energy generated compared to a typical coal-fired power plant. Several studies have investigated server resource management techniques (e.g., switching off idle servers) to modulate energy demand, as a low-cost and green alternative for data center demand response.

Compared to owner-operated data centers, multi-tenant colocations can be more appropriate candidates for demand response, given their typical metropolitan locations where demand response is most needed. Nonetheless, colocation data center demand response presents a new “split-incentive” challenge: the colocation operator wants demand response for financial compensation from the grid, but relies on diesel generation to provide it; tenants manage their own servers without coordination and have no incentive to shed consumption for demand response.

In this project, we propose socially optimal market mechanisms (e.g., supply function bidding and auctions) to coordinate tenants’ resource management for efficient demand response. Our study not only addresses the “split-incentive” challenge that hinders the wide adoption of colocation demand response, but also advances the state of the art in market mechanism design.

P. Li, J. Yang, and S. Ren, “Expert-Calibrated Learning for Online Optimization with Switching Costs,” ACM International Conference on Measurement and Modeling of Computer Systems (SIGMETRICS), 2022.

Q. Sun, S. Ren, C. Wu, and Z. Li, “An Online Incentive Mechanism for Emergency Demand Response in Geo-Distributed Colocation Data Centers,” ACM International Conference on Future Energy Systems (e-Energy, Best Paper Award), 2016.

N. Chen, X. Ren, S. Ren, and A. Wierman, “Greening Multi-Tenant Data Center Demand Response,” The 33rd IFIP WG7.3 International Symposium on Computer Performance, Modeling, Measurements and Evaluation (Performance) 2015.

L. Zhang, S. Ren, C. Wu, and Z. Li, “A Truthful Incentive Mechanism for Emergency Demand Response in Colocation Data Centers,” IEEE Conference on Computer Communications (INFOCOM) 2015.

Timing Power Attacks

Data center operators commonly oversubscribe their power infrastructure capacities to reduce capital expense and/or increase infrastructure utilization. Nonetheless, this practice results in occasional power emergencies: although generally uncommon, tenants’ aggregate power demand can exceed the design power capacity (a.k.a. a power emergency) when their power consumption peaks simultaneously. Power emergencies compromise infrastructure redundancy protection and can increase the outage risk by 280+ times compared to a fully-redundant case. In our project, we discover that the common practice of power infrastructure oversubscription in data centers further exposes dangerous vulnerabilities to well-timed power attacks (i.e., maliciously timed power loads that overload the infrastructure capacity), possibly creating outages and resulting in multimillion-dollar losses.

More specifically, although power emergencies are rare under typical operation due to statistical multiplexing of the servers’ power usage across benign tenants, a malicious tenant can invalidate the anticipated multiplexing effects by intentionally increasing its own power load up to its subscribed capacity at moments that coincide with high aggregate power demand of the benign tenants. This can greatly increase the chance of overloading the shared power capacity, thus threatening the data center uptime and damaging the operator's business image.

In order to create severe power emergencies, the attacker must precisely time its power attacks. This may seem impossible because the attacker cannot use its full subscribed capacity continuously or too frequently, which would lead the attacker to be easily discovered and evicted due to contractual violations. Further, the attacker does not have access to the operator's power meters and does not know the aggregate power usage of benign tenants at runtime. The key idea we exploit is that the physical co-location of tenants’ servers in a shared facility means the existence of several important side channels, which leak the power usage information of benign tenants and hence assist the attacker with timing its power attacks.

Thermal side channel

Almost all server power is converted into heat, and some of the hot air exiting the servers may recirculate and travel a few meters to other server racks (due to the lack of heat containment in many data centers), which impacts the inlet temperature of those other racks. Heat recirculation constitutes an important side channel that the attacker can exploit to estimate the power of nearby tenants sharing the same power infrastructure.

We propose a novel model-based approach: the attacker can build an estimated model for heat recirculation and then leverage a state-augmented Kalman filter to extract the hidden information about benign tenants’ power usage from the observed temperatures at its server inlets. By doing so, the attacker can control the timing of its power attacks without blindly or continuously using its maximum power: attacks are only launched when the aggregate power of benign tenants is also high.
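For readers unfamiliar with Kalman filtering, the sketch below shows the basic predict/update loop on a deliberately simplified problem: a plain scalar filter that tracks a slowly varying hidden quantity (a power level in kW) from readings assumed to be proportional to it (a temperature rise in °C). It is not the state-augmented filter or the heat recirculation model from the paper; the linear `gain`, the random-walk state model, and the noise variances `q` and `r` are all assumptions chosen for illustration.

```python
def kalman_track(measurements, gain, q, r, x0=0.0, p0=1.0):
    """Scalar Kalman filter.
    State model:       x[t] = x[t-1] + w,   w ~ N(0, q)  (random walk)
    Measurement model: z[t] = gain * x[t] + v,  v ~ N(0, r)
    Returns the filtered state estimate after each measurement."""
    x, p = x0, p0
    estimates = []
    for z in measurements:
        p = p + q                            # predict: uncertainty grows
        k = p * gain / (gain * gain * p + r)  # Kalman gain
        x = x + k * (z - gain * x)           # update with the innovation
        p = (1.0 - k * gain) * p             # posterior variance
        estimates.append(x)
    return estimates


# Synthetic, noise-free readings: 100 kW hidden load, 0.02 degC per kW.
readings = [0.02 * 100.0] * 200
est = kalman_track(readings, gain=0.02, q=1.0, r=0.01, x0=50.0, p0=100.0)
print(round(est[0], 2), round(est[-1], 2))
```

The filter starts from a wrong initial guess and moves toward the value implied by the readings, which is the same mechanism, in miniature, that lets a hidden quantity be inferred from an indirect observation.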

M. A. Islam, S. Ren, and A. Wierman, “Exploiting a Thermal Side Channel for Power Attacks in Multi-Tenant Data Centers,” ACM Conference on Computer and Communications Security (CCS), 2017. [Slides]

Acoustic side channel

We discover an acoustic side channel resulting from servers’ noise generated by cooling fans. Concretely, the key idea we exploit is that the energy of noise generated by a server's cooling fans increases with its fan speed measured in revolutions per minute (RPM), which is well correlated with the server power. Thus, through measurement of the received noise energy using microphones, an attacker can possibly infer the benign tenants’ power usage and launch well-timed power attacks, which significantly threaten the data center availability.

We first investigate differences between servers’ fan noise and the air conditioning system's fan noise in terms of frequency characteristics, and then propose a high-pass filter that can filter out most of the air conditioning system's fan noise while preserving the acoustic side channel. Second, we propose an affine non-negative matrix factorization (NMF) technique with sparsity constraint, which helps the attacker demix its received aggregate noise energy into multiple consolidated sources, each corresponding to a group of benign server racks that tend to have correlated fan noise energy. Thus, when all or most of the consolidated sources have a relatively higher level of noise energy, it is more likely to have an attack opportunity. More importantly, noise energy demixing is achieved in a model-free manner: the attacker does not need to know any model of noise propagation. Third, we propose an attack strategy based on a finite state machine, which guides the attacker to enter the “attack” state upon detecting a prolonged high noise energy.
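The finite state machine in the third step can be pictured as a simple "prolonged high" detector. The sketch below is a hypothetical three-state version (`idle`, `waiting`, `attack`) that only reaches the `attack` state after `min_high` consecutive readings at or above a threshold. The state names other than "attack", the threshold rule, and the parameters are assumptions for illustration and may differ from the machine in the paper.

```python
def run_fsm(energy, threshold, min_high):
    """Return the state after each noise-energy reading.
    idle    -> reading below threshold
    waiting -> reading high, but not yet for min_high consecutive readings
    attack  -> reading has been high for at least min_high readings in a row"""
    streak = 0
    states = []
    for e in energy:
        if e >= threshold:
            streak += 1
            states.append("attack" if streak >= min_high else "waiting")
        else:
            streak = 0  # any low reading resets the machine
            states.append("idle")
    return states


print(run_fsm([1, 5, 5, 1, 5, 5, 5, 5, 1], threshold=4, min_high=3))
```

Requiring a sustained high level, instead of reacting to a single reading, filters out short noise bursts. The same structure could serve a defender as a monitor for sustained high-load periods.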

M. A. Islam, L. Yang, K. Ranganath, and S. Ren, “Why Some Like It Loud: Timing Power Attacks in Multi-tenant Data Centers Using an Acoustic Side Channel,” ACM International Conference on Measurement and Modeling of Computer Systems (SIGMETRICS), 2018. [Slides]

Voltage side channel

We find that a power factor correction (PFC) circuit is almost universally built into today's server power supply units to shape the server's current draw to follow the sinusoidal voltage waveform and hence improve the power factor. The PFC circuit includes a pulse-width modulation (PWM) controlled switch that turns on and off at a high frequency (40–150kHz) to regulate the current. This switching operation creates high-frequency current ripples which, due to Ohm's Law, generate voltage ripples along the power line from which the server draws current.

Importantly, the high-frequency voltage ripple becomes more prominent as a server consumes more power and can be transmitted over the data center power line network without interference from the nominal grid voltage frequency (50/60Hz). As a consequence, the attacker can easily sense its supplied voltage signal and extract benign tenants’ power usage information from the voltage ripples to time its power attacks.
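The frequency separation between the ripple and the grid frequency is what makes the ripple easy to isolate. The sketch below illustrates this on a synthetic signal using the Goertzel algorithm, a standard single-frequency DFT. The 170 V / 60 Hz mains component, the 70 kHz ripple frequency, the ripple amplitudes, and the 200 kHz sampling rate are invented for the example and do not come from the paper.

```python
import math


def goertzel_amplitude(samples, sample_rate, freq):
    """Amplitude of the sinusoidal component at `freq` (Goertzel algorithm).
    Exact when `freq` completes a whole number of cycles in the window."""
    n = len(samples)
    coeff = 2.0 * math.cos(2.0 * math.pi * freq / sample_rate)
    s1 = s2 = 0.0
    for x in samples:
        s0 = x + coeff * s1 - s2
        s2, s1 = s1, s0
    power = s1 * s1 + s2 * s2 - coeff * s1 * s2  # |X[k]|^2
    return 2.0 * math.sqrt(max(power, 0.0)) / n


def synth_voltage(ripple_v, n=10_000, sample_rate=200_000.0):
    """Synthetic line voltage: 60 Hz mains plus a small 70 kHz ripple."""
    return [170.0 * math.sin(2.0 * math.pi * 60.0 * i / sample_rate)
            + ripple_v * math.sin(2.0 * math.pi * 70_000.0 * i / sample_rate)
            for i in range(n)]


light = goertzel_amplitude(synth_voltage(0.2), 200_000.0, 70_000.0)
heavy = goertzel_amplitude(synth_voltage(0.5), 200_000.0, 70_000.0)
print(light, heavy)
```

Even though the mains component is hundreds of times larger than the ripple, the estimate at the ripple frequency is essentially unaffected by it in this idealized setting, because the two components sit in different frequency bins.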

M. A. Islam and S. Ren, “Ohm's Law in Data Centers: A Voltage Side Channel for Timing Power Attacks,” ACM Conference on Computer and Communications Security (CCS), 2018.

Selected Talks

UC Irvine (10/2017), U Oregon (03/2017), Tsinghua University (09/2017), ACACES Summer School by HiPEAC (07/2016), University of Rome “Sapienza” (07/2016), Rutgers (02/2016), Texas State U (02/2016), Penn. State U (02/2016), U Central FL (09/2015), UFL (09/2015), U South FL (09/2015), U Houston (09/2015), Virginia Tech (03/2015), NC State U (03/2015), Oak Ridge National Labs (11/2014), FL International U (09/2014), Tsinghua U (06/2014), Peking U (06/2014), Chinese Academy of Sciences (06/2014), Shanghai Jiaotong U (06/2014), CityU of HK (05/2014), CUHK (05/2014), U of HK (05/2014), UC Berkeley (05/2014), U of Alberta (05/2014)

Key Participants

Shaolei Ren (PI)

Zhihui Shao

Jianyi Yang

Fangfang Yang

Luting Yang (Now with Walmart Labs)

Mohammad A. Islam (Now with UT Arlington)

Courses

EE-252: Data Center Architecture

EE-260: Advanced Topics in Sustainable Computing (offered in Fall, 2015)

CAP-4783 (FIU): Management of Datacenter Systems

Selected Publications

Conference Proceedings

P. Li, J. Yang, and S. Ren, “Expert-Calibrated Learning for Online Optimization with Switching Costs,” ACM International Conference on Measurement and Modeling of Computer Systems (SIGMETRICS), 2022.

Z. Shao, M. A. Islam, and S. Ren, “Heat Behind the Meter: A Hidden Threat of Thermal Attacks in Edge Colocation Data Centers,” IEEE International Symposium on High-Performance Computer Architecture (HPCA), 2021.

J. Yang and S. Ren, “Bandit Learning with Predicted Context: Regret Analysis and Selective Context Query,” IEEE International Conference on Computer Communications (INFOCOM), 2021.

M. A. Islam and S. Ren, “Ohm's Law in Data Centers: A Voltage Side Channel for Timing Power Attacks,” ACM Conference on Computer and Communications Security (CCS), 2018. [Slides] [Video]

M. A. Islam, L. Yang, K. Ranganath, and S. Ren, “Why Some Like It Loud: Timing Power Attacks in Multi-tenant Data Centers Using an Acoustic Side Channel,” ACM International Conference on Measurement and Modeling of Computer Systems (SIGMETRICS), 2018. [Slides]

M. A. Islam, X. Ren, S. Ren, and A. Wierman, “A Spot Capacity Market to Increase Power Infrastructure Utilization in Multi-Tenant Data Centers,” IEEE International Symposium on High Performance Computer Architecture (HPCA), 2018. (Extended abstract appeared at ACM SIGMETRICS 2017)

W. Jiang, S. Ren, F. Liu, and H. Jin, “Non-IT Energy Accounting in Virtualized Datacenter,” International Conference on Distributed Computing Systems (ICDCS), 2018.

M. A. Islam, S. Ren, and A. Wierman, “Exploiting a Thermal Side Channel for Power Attacks in Multi-Tenant Data Centers,” ACM Conference on Computer and Communications Security (CCS), 2017. [Slides] [Video]

M. A. Islam, S. Ren, and A. Wierman, “A First Look at Power Attacks in Multi-Tenant Data Centers,” Greenmetrics (co-located with ACM SIGMETRICS), 2017.

M. A. Islam, X. Ren, S. Ren, A. Wierman, and X. Wang, “A Market Approach for Handling Power Emergencies in Multi-Tenant Data Center,” IEEE International Symposium on High Performance Computer Architecture (HPCA), 2016. [Slides]

Q. Sun, S. Ren, C. Wu, and Z. Li, “An Online Incentive Mechanism for Emergency Demand Response in Geo-Distributed Colocation Data Centers,” ACM International Conference on Future Energy Systems (e-Energy, Best Paper Award), 2016.

M. A. Islam, H. Mahmud, S. Ren, and X. Wang, “Paying to Save: Reducing Cost of Colocation Data Center via Rewards,” IEEE International Symposium on High Performance Computer Architecture (HPCA) 2015. [Slides]

N. Chen, X. Ren, S. Ren, and A. Wierman, “Greening Multi-Tenant Data Center Demand Response,” The 33rd International Symposium on Computer Performance, Modeling, Measurements and Evaluation (IFIP WG7.3 Performance) 2015. [PDF]

L. Zhang, S. Ren, C. Wu, and Z. Li, “A Truthful Incentive Mechanism for Emergency Demand Response in Colocation Data Centers,” IEEE Conference on Computer Communications (INFOCOM) 2015. [PDF]

Q. Sun, C. Wu, S. Ren, and Z. Li, “Rewarding in Colocation Data Center: Truthful Mechanism for Emergency Demand Response,” IEEE/ACM International Symposium on Quality of Service (IWQoS), 2015. [PDF]

S. Ren and M. A. Islam, “Colocation Demand Response: Why Do I Turn Off My Servers?” USENIX International Conference on Autonomic Computing (ICAC), 2014.

S. Ren and M. A. Islam, “A First Look at Colocation Demand Response,” Greenmetrics (co-located with ACM SIGMETRICS), 2014.

Journal Articles

S. Ren, “Managing Power Capacity as a First-Class Resource in Multi-Tenant Data Centers,” IEEE Internet Computing (special issue on “Energy-Efficient Data Centers”), vol. 21, no. 4, pp. 8-14, July 2017.

Q. Sun, S. Ren, and C. Wu, “Fair Online Power Capping for Emergency Handling in Multi-Tenant Cloud Data Centers,” IEEE Transactions on Cloud Computing, vol. pp, no. 99, 2017.

M. A. Islam, H. Mahmud, S. Ren, and X. Wang, “A Carbon-Aware Incentive Mechanism for Greening Colocation Data Centers,” IEEE Transactions on Cloud Computing, vol. pp, no. 99, 2017.

Q. Sun, C. Wu, Z. Li, and S. Ren, “Colocation Demand Response: Joint Online Mechanisms for Individual Utility and Social Welfare Maximization,” IEEE Journal on Selected Areas in Communications, vol. 34, no. 12, pp. 3978-3992, Dec. 2016.

N. H. Tran, T. Z. Oo, S. Ren, Z. Han, E.-N. Huh, and C.-S. Hong, “Reward-to-Reduce: An Incentive Mechanism for Economic Demand Response of Colocation Datacenters,” IEEE Journal on Selected Areas in Communications, vol. 34, no. 12, pp. 3941-3953, Dec. 2016.

L. Zhang, S. Ren, C. Wu, and Z. Li, “A Truthful Incentive Mechanism for Emergency Demand Response in Geo-distributed Colocation Data Centers,” ACM Transactions on Modeling and Performance Evaluation of Computing Systems, vol. 1, no. 4, Sep. 2016.

L. Zhang, Z. Li, C. Wu, and S. Ren, “Online Electricity Cost Saving Algorithms for Co-Location Data Centers,” IEEE Journal on Selected Areas in Communications (Special Issue on “Green Communications and Networking”), vol. 33, no. 12, pp. 2906-2919, Dec. 2015.

N. H. Tran, C. T. Do, S. Ren, Z. Han, and C-S. Hong, “Incentive Mechanisms for Economic and Emergency Demand Responses of Colocation Datacenters,” IEEE Journal on Selected Areas in Communications (Special Issue on “Green Communications and Networking”), vol. 33, no. 12, pp. 2892-2905, Dec. 2015.

Acknowledgement

This project is supported in part by the U.S. NSF Faculty Early Career Development (CAREER) Program under the grant CNS-1551661.

Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the NSF.