Collaborative Research: CNS Core: Small: Towards Automated and QoE-driven Machine Learning Model Selection for Edge Inference

(NSF-CNS-2007115 and NSF-CNS-2006630)

Overview

Edge devices, such as mobile phones, drones and robots, have been emerging as an increasingly important platform for deep neural network (DNN) inference. For an edge device, selecting an optimal DNN model out of many possibilities is crucial for maximizing the user’s quality of experience (QoE), but this is significantly challenged by the high degree of heterogeneity in edge devices and constantly changing usage scenarios. The current practice commonly selects a single DNN model for many or all edge devices, which at best provides a satisfactory QoE for only a small fraction of users. Alternatively, device-specific DNN model optimization is time-consuming and does not scale to a large diversity of edge devices. Moreover, existing approaches focus on optimizing a certain objective metric for edge inference, which may not translate into an improvement in the actual QoE for users. By leveraging the predictive power of machine learning and keeping users in the loop, this project proposes an automated and scalable device-level DNN model selection engine for QoE-optimal edge inference. Specifically, this project includes two thrusts: first, it exploits online learning to predict QoE for each edge device, automating deployment-stage DNN model selection; and second, it builds a runtime QoE predictor and automatically selects an optimal DNN model given runtime contextual information.
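
To make the first thrust more concrete, the sketch below treats each candidate DNN model as an arm of a multi-armed bandit and uses the textbook UCB1 rule to pick a model for one device from noisy QoE feedback. This is only an illustration under stated assumptions: UCB1 is a generic stand-in for "online learning", QoE feedback is assumed to be a reward in [0, 1], and the model names and simulated QoE values are hypothetical. It is not the project's actual algorithm.

```python
import math
import random


class UCB1ModelSelector:
    """Choose among candidate DNN models with the UCB1 bandit rule.

    Assumption (hypothetical): each deployment returns a QoE reward in [0, 1].
    """

    def __init__(self, model_names):
        self.model_names = list(model_names)
        self.counts = {m: 0 for m in self.model_names}   # times each model was deployed
        self.means = {m: 0.0 for m in self.model_names}  # running mean of observed QoE
        self.total = 0                                   # total deployments so far

    def select(self):
        # Deploy every model once before using confidence bounds.
        for m in self.model_names:
            if self.counts[m] == 0:
                return m

        def upper_bound(m):
            # Empirical mean plus an exploration bonus that shrinks with more samples.
            return self.means[m] + math.sqrt(2.0 * math.log(self.total) / self.counts[m])

        return max(self.model_names, key=upper_bound)

    def update(self, model, qoe):
        # Incrementally update the running mean QoE of the deployed model.
        self.counts[model] += 1
        self.total += 1
        self.means[model] += (qoe - self.means[model]) / self.counts[model]


# Hypothetical mean QoE of three candidate models on one device (unknown to the selector).
TRUE_QOE = {"model_a": 0.40, "model_b": 0.75, "model_c": 0.60}


def simulate(true_qoe, rounds, seed=0, noise=0.1):
    """Run the selector against simulated noisy QoE feedback (Gaussian, clipped to [0, 1])."""
    rng = random.Random(seed)
    selector = UCB1ModelSelector(true_qoe)
    for _ in range(rounds):
        m = selector.select()
        reward = min(1.0, max(0.0, rng.gauss(true_qoe[m], noise)))
        selector.update(m, reward)
    return selector


if __name__ == "__main__":
    sel = simulate(TRUE_QOE, rounds=5000)
    print(sel.counts)  # the highest-QoE model should receive most deployments
```

UCB1 is used here because it needs no prior knowledge of the models and keeps occasionally re-testing weaker candidates, which matters when a device's QoE for each model is initially unknown.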

This project represents an important departure from and an essential complement to the current practices in DNN model optimization. It can bring the benefits of DNN-enabled intelligence to many more resource-constrained edge devices with an optimal QoE. Additionally, it provides novel observations, insights and principles for edge inference, catalyzing the transformation of the design of DNN models into a new user-centric paradigm. This project also enables new opportunities to improve curriculum design and attract students, especially under-represented minorities, to engage in science, technology, engineering, and mathematics fields.

Challenges

Along with advances in embedded and mobile hardware, recent breakthroughs in DNN model compression (e.g., network pruning and weight quantization) have reduced model sizes by orders of magnitude with an acceptable accuracy loss, successfully turning edge inference into reality. However, to run inference on resource-constrained edge devices with a satisfactory user experience (which we refer to as quality of experience, or QoE), inference accuracy is not the sole metric to optimize. Instead, the DNN model architecture must be automatically tailored to the specific edge device hardware and adapted to users’ input data at runtime, striking an optimal balance among important metrics such as accuracy, latency and energy consumption. This is challenging because of the extremely high degree of heterogeneity in edge inference, in terms of the underlying device hardware, usage scenarios and DNN models.
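
One simple way to picture "an optimal balance" among accuracy, latency and energy is to score each candidate model with user-specific weights and pick the highest score. The sketch below assumes a weighted linear QoE (a common scalarization, not the project's QoE model), assumed latency and energy budgets for normalization, and hypothetical profile numbers for three candidate models.

```python
from dataclasses import dataclass


@dataclass
class ModelProfile:
    name: str
    accuracy: float    # accuracy in [0, 1]
    latency_ms: float  # inference latency on the target device
    energy_mj: float   # energy per inference, in millijoules


def qoe_score(p, w_acc, w_lat, w_energy, max_latency_ms=200.0, max_energy_mj=500.0):
    """Hypothetical QoE: weighted sum of accuracy and normalized latency/energy savings.

    Latency and energy are normalized against assumed budgets so every term lies in [0, 1].
    """
    lat_term = 1.0 - min(p.latency_ms / max_latency_ms, 1.0)
    energy_term = 1.0 - min(p.energy_mj / max_energy_mj, 1.0)
    return w_acc * p.accuracy + w_lat * lat_term + w_energy * energy_term


def select_model(profiles, **weights):
    # Return the candidate with the highest QoE under this user's weights.
    return max(profiles, key=lambda p: qoe_score(p, **weights))


# Hypothetical profiles of three compressed variants on one device.
CANDIDATES = [
    ModelProfile("large", accuracy=0.92, latency_ms=100.0, energy_mj=250.0),
    ModelProfile("medium", accuracy=0.85, latency_ms=60.0, energy_mj=150.0),
    ModelProfile("small", accuracy=0.75, latency_ms=20.0, energy_mj=50.0),
]

if __name__ == "__main__":
    accuracy_user = select_model(CANDIDATES, w_acc=0.9, w_lat=0.05, w_energy=0.05)
    energy_user = select_model(CANDIDATES, w_acc=0.4, w_lat=0.1, w_energy=0.5)
    print(accuracy_user.name, energy_user.name)
```

With these made-up numbers, an accuracy-focused user ends up with the large model and an energy-sensitive user with the small one, illustrating why optimizing accuracy alone need not maximize QoE.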

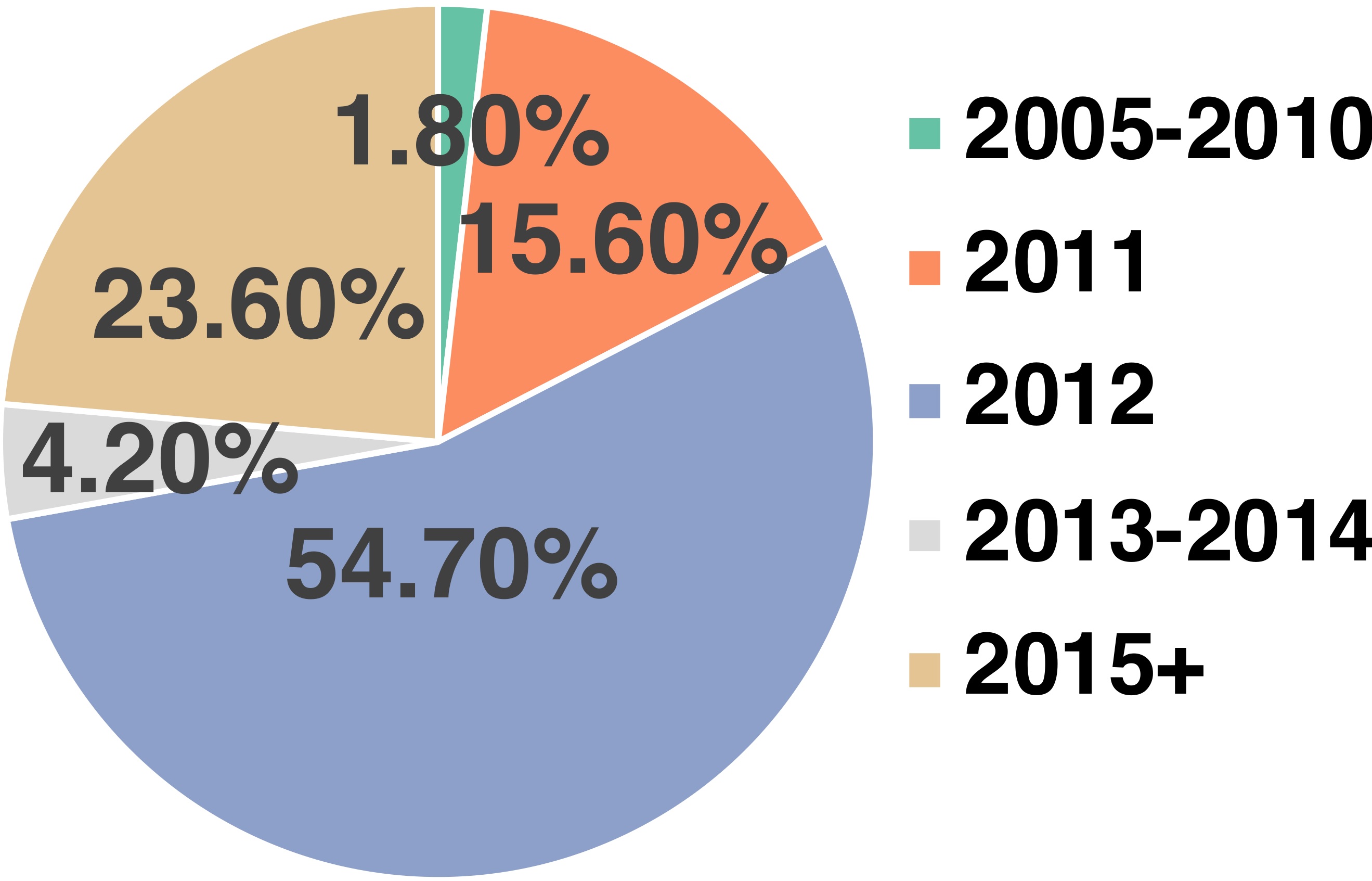

Edge device heterogeneity. Take edge inference on mobile platforms as an example. Mobile devices have extremely diverse computing and memory capabilities: some high-end devices have state-of-the-art CPUs along with dedicated graphics processing units (GPUs) and even purpose-built accelerators to speed up inference, whereas many others are powered by CPUs that are several years old.

Usage scenario heterogeneity. DNN models running on different edge devices can be exposed to significantly different usage scenarios: different locations, illumination conditions, temperatures, etc. These lead to drastically different distributions of users’ input data, which can result in very different inference accuracies even for the same DNN model. Moreover, inference latency can differ significantly even across devices with the same configuration, due to system status (e.g., the number of concurrently running processes, battery condition, etc.). Last but not least, users have different preferences across metrics: some users are more energy-sensitive due to limited battery capacity, whereas others are willing to trade higher energy consumption for lower latency.
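
Because latency depends on system status, the best model for a given device can change at runtime. The sketch below assumes a hypothetical linear contention model (each background process adds 25% to idle latency), hypothetical latency and accuracy profiles, and a user-specified latency deadline; it picks the most accurate model predicted to meet the deadline. It is an illustration only, not the project's runtime QoE predictor.

```python
# Hypothetical idle-device latency profiles (ms) for three candidate models.
IDLE_LATENCY_MS = {"large": 90.0, "medium": 45.0, "small": 15.0}
# Hypothetical accuracies on the user's current input distribution.
ACCURACY = {"large": 0.92, "medium": 0.86, "small": 0.78}


def predicted_latency(model, background_procs, slowdown_per_proc=0.25):
    """Assumed linear contention model: each background process adds 25% to idle latency."""
    return IDLE_LATENCY_MS[model] * (1.0 + slowdown_per_proc * background_procs)


def select_under_deadline(deadline_ms, background_procs):
    # Keep models whose predicted latency under the current load meets the deadline.
    feasible = [m for m in IDLE_LATENCY_MS
                if predicted_latency(m, background_procs) <= deadline_ms]
    if not feasible:
        # No model meets the deadline: fall back to the fastest one.
        return min(IDLE_LATENCY_MS, key=lambda m: predicted_latency(m, background_procs))
    # Among feasible models, choose the most accurate.
    return max(feasible, key=ACCURACY.get)


if __name__ == "__main__":
    for procs in (0, 2, 12):
        print(procs, select_under_deadline(100.0, procs))
```

Under a 100 ms deadline and these assumed numbers, the choice moves from the large model on an idle device to the medium and then the small model as background load grows.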

DNN model heterogeneity. Recent studies have proposed various DNN model compression techniques, such as network pruning, weight quantization, low-rank matrix approximation, and knowledge distillation. As a consequence, even from the same training data set, hundreds or even more different DNN models can be generated (e.g., using neural architecture search techniques) by varying the compression techniques or optimization parameters. While many of the resulting lightweight DNN models can be deployed on a target edge device, they can exhibit very different tradeoffs in a multi-dimensional space of important metrics (e.g., accuracy vs. latency vs. energy), and no single model can achieve optimality in all dimensions.
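
The claim that no single model is optimal in every dimension can be seen by computing the Pareto front of a candidate set: the models that no other candidate beats on all metrics at once. The sketch below uses hypothetical candidates and metric values; only the dominance test itself is standard.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    accuracy: float    # higher is better
    latency_ms: float  # lower is better
    energy_mj: float   # lower is better


def dominates(a, b):
    """True if `a` is no worse than `b` on every metric and strictly better on at least one."""
    no_worse = (a.accuracy >= b.accuracy and a.latency_ms <= b.latency_ms
                and a.energy_mj <= b.energy_mj)
    strictly_better = (a.accuracy > b.accuracy or a.latency_ms < b.latency_ms
                       or a.energy_mj < b.energy_mj)
    return no_worse and strictly_better


def pareto_front(candidates):
    # Keep models not dominated by any other candidate (O(n^2), fine for hundreds of models).
    return [c for c in candidates
            if not any(dominates(o, c) for o in candidates if o is not c)]


# Hypothetical compressed variants generated from the same training data.
CANDIDATES = [
    Candidate("pruned_50", 0.91, 80.0, 200.0),
    Candidate("pruned_80", 0.87, 45.0, 110.0),
    Candidate("int8_quant", 0.89, 40.0, 90.0),
    Candidate("distilled_small", 0.82, 15.0, 40.0),
    Candidate("lowrank", 0.86, 50.0, 120.0),
]

if __name__ == "__main__":
    print([c.name for c in pareto_front(CANDIDATES)])
```

Here three models remain on the front, each best at a different tradeoff, so choosing among them requires knowing what a particular device and user value most.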

Consequently, in view of extremely heterogeneous edge devices, usage scenarios and DNN models, a new challenge arises: how to automatically select DNN models for edge inference with optimal QoE?

Principal Investigators

Shaolei Ren (PI at UC Riverside)

Jie Xu (PI at University of Miami)

Selected Publications

B. Lu, J. Yang, J. Xu, and S. Ren, “Improving QoE of Deep Neural Network Inference on Edge Devices: A Bandit Approach,” IEEE Internet of Things Journal, Jun. 2022.

Y. Bai, L. Chen, S. Ren, and J. Xu, “Automated Customization of On-Device Inference for Quality-of-Experience Enhancement,” IEEE Transactions on Computers, Sep. 2022.

Y. Bai, L. Chen, L. Zhang, M. Abdel-Mottaleb, and J. Xu, “Adaptive Deep Neural Network Ensemble for Inference-as-a-Service on Edge Computing Platforms,” IEEE International Conference on Mobile Ad-Hoc and Smart Systems (MASS), 2021.

Y. Bai, L. Chen, M. Abdel-Mottaleb, and J. Xu, “Automated Ensemble for Deep Learning Inference on Edge Computing Platforms,” IEEE Internet of Things Journal, vol. 9, no. 6, 2022.

Acknowledgement

This project is supported in part by the U.S. NSF under grants CNS-2007115 and CNS-2006630.

Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the NSF.